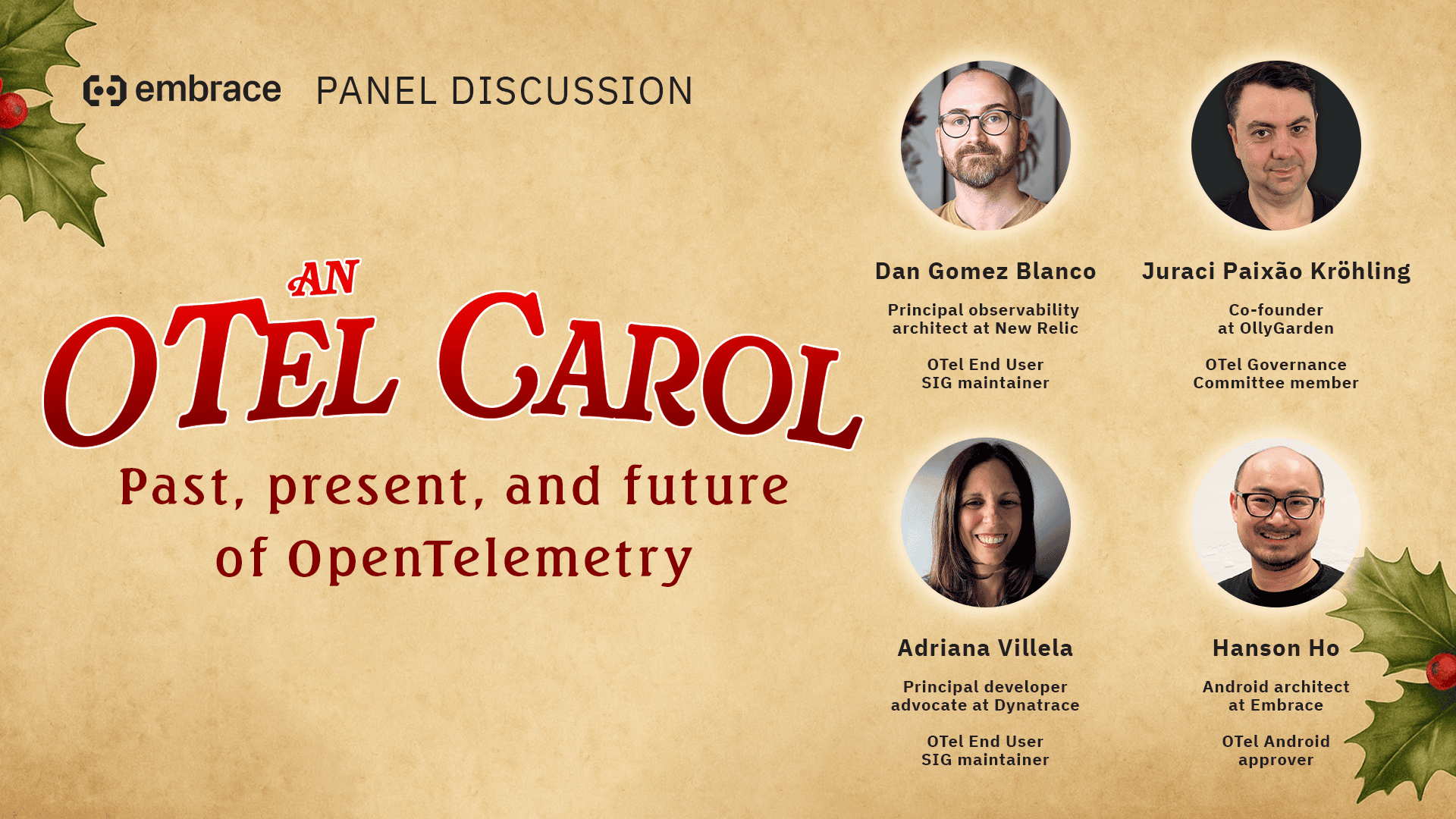

Recently, I sat down with a fun group of OTel experts to look back at all the great achievements in the OpenTelemetry project over the last year. Well, to be fair, not all of them. Have you seen how big OTel is?

Instead, we picked some of our favorites, everything from semantic conventions to tooling to new special interest groups (SIGs). And given the holiday season, we decided to share them in “A Christmas Carol”-esque journey through the past, present, and future of OpenTelemetry.

Wherever you are on your OpenTelemetry or observability journey, there’s definitely something for you in this wide-ranging discussion. Here’s just a small sample of topics we covered:

- How declarative configuration simplifies OpenTelemetry setup across your entire system

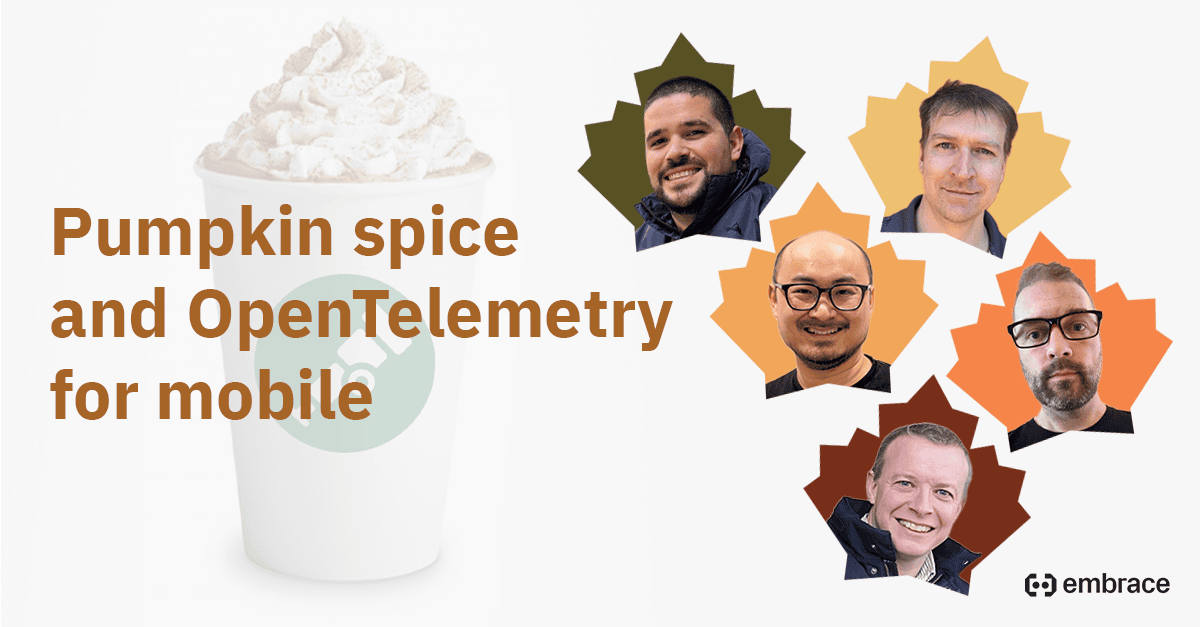

- What’s improved in OpenTelemetry support for mobile and web

- How Instrumentation Score helps engineering teams understand and improve telemetry quality

- Why 2026 is going to be the year of OpenTelemetry Weaver

- How community-driven contributions (like the Go compile-time instrumentation) are growing in OTel, and why that makes projects more sustainable

- What’s the future of Kotlin support in OpenTelemetry

- What’s in store for AI support in OpenTelemetry

And so much more! If you want to watch people in holiday sweaters get you up to speed on the last year in OpenTelemetry, check out the full panel below. Scroll past the video for some of the biggest highlights from our discussion and the full transcript. See you at the next one!