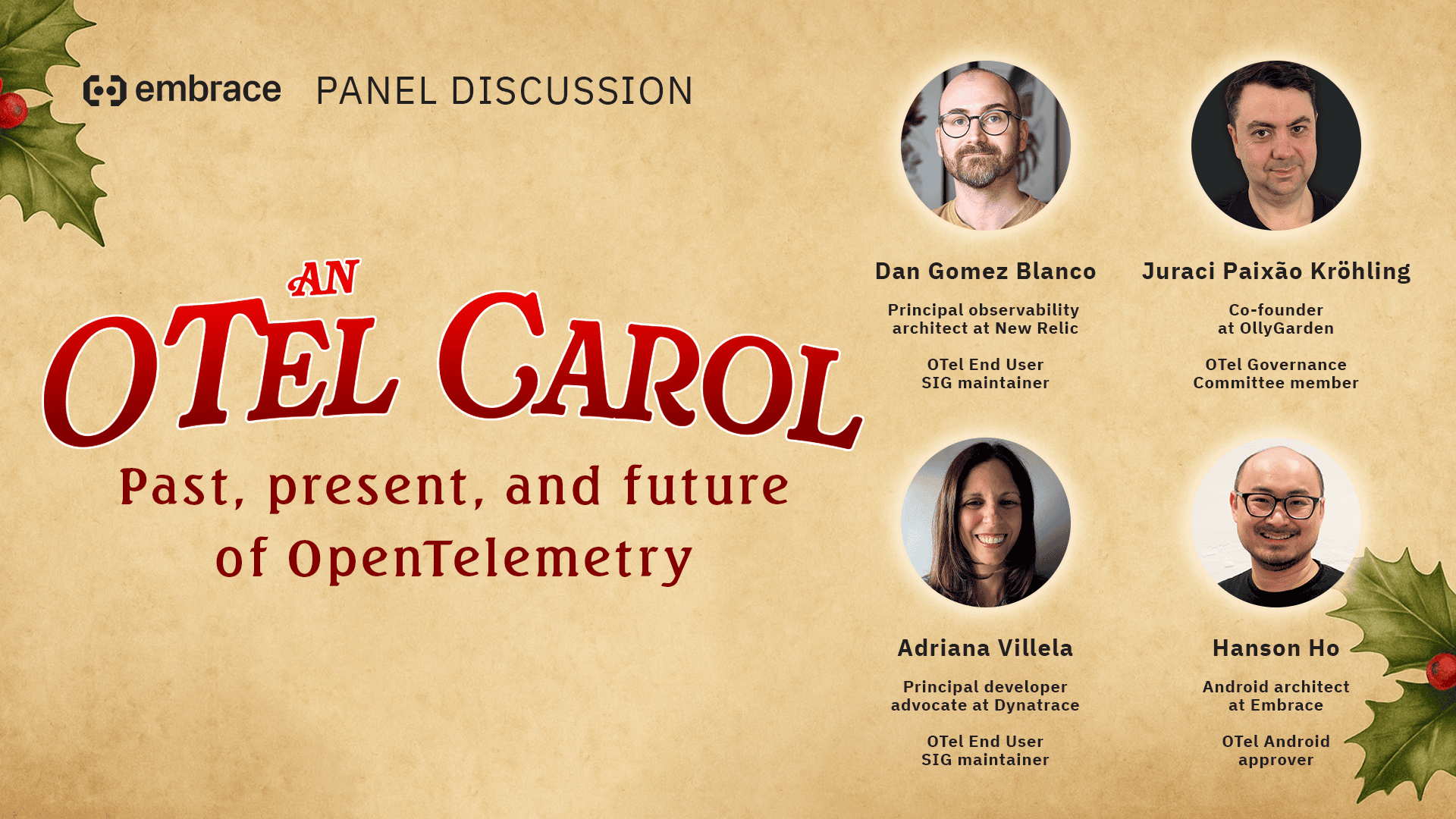

Recently, a jolly group of OTel experts and enthusiasts gathered to discuss what we loved about OpenTelemetry in 2024 and what we are most excited about for OTel in 2025.

Well, that was part of it.

We also wore some fun holiday sweaters and ran polls to learn about everyone’s favorite holiday traditions. I was surprised at some of the findings, including:

- The audience’s favorite holiday drink was mulled wine.

- The audience’s favorite holiday song was “All I Want for Christmas Is You.”

I was even more surprised to see one of the panelists wearing a Popeyes sweater – yes, Popeyes, the fried chicken establishment – as a holiday sweater. But rest assured, there is an explanation.

Wherever you are on your OTel journey, there’s something for you in this wide-ranging discussion. Here’s just a small sample of topics we covered:

- How the end of the zero-interest-rate phenomenon is forcing change in observability and platform engineering strategies.

- What observability 2.0 REALLY means, and why OpenTelemetry plays such a big part.

- Key OTel improvements in developer experience, including SDK stability work, the new file config schema, and the growth of libraries using OTel-native instrumentation.

- OTel innovation happening in CI/CD observability and better interoperability with Prometheus.

- Improving OTel support for client-side observability with entities and resource providers.

Check out the full panel below, then scroll past the video for a few of the best quotes from our discussion and the full transcript. And, of course, happy holidays and an even happier new year!