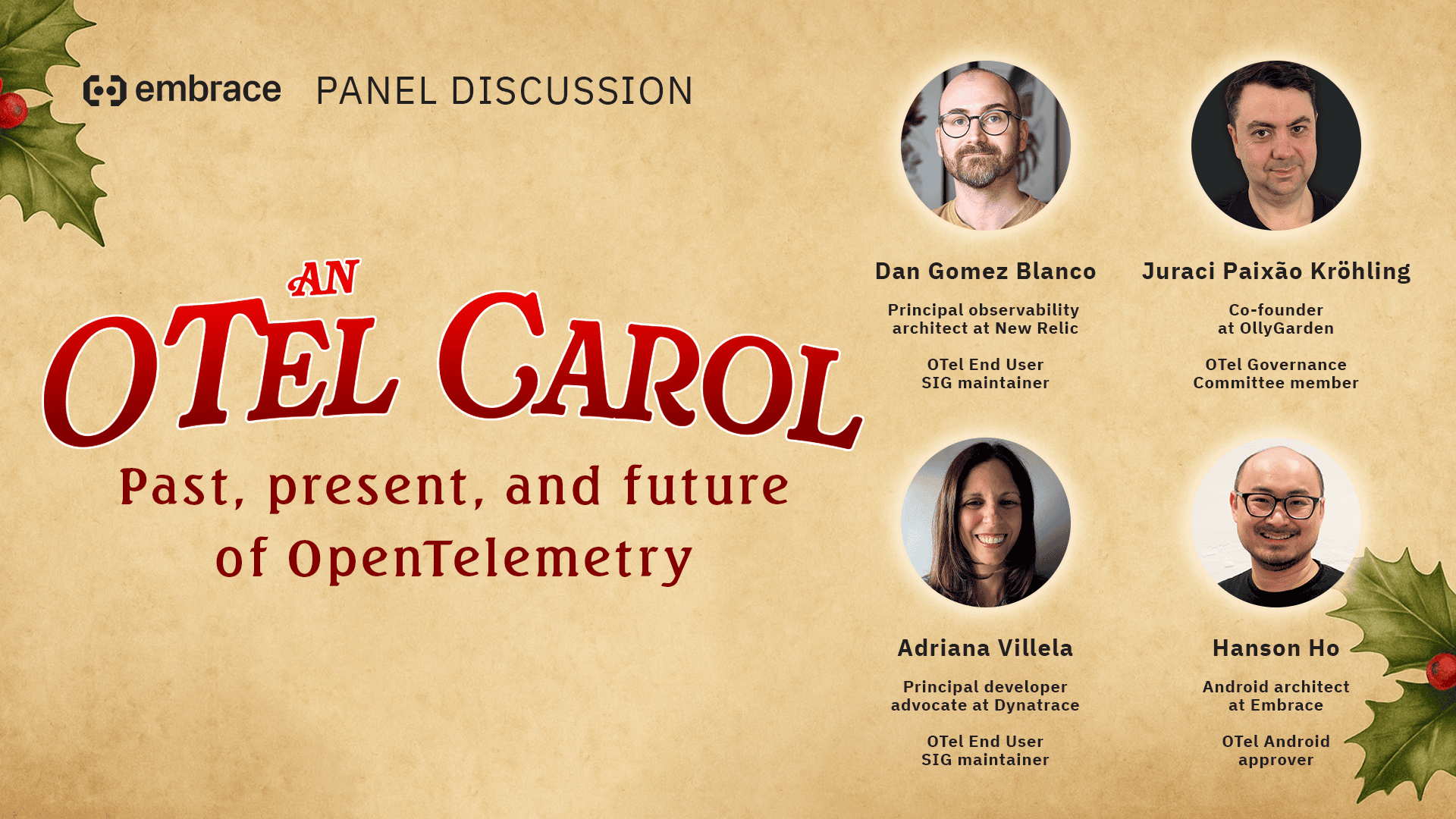

Recently, a fun group of OTel experts and enthusiasts gathered to share their love for OpenTelemetry and observability.

Since the event happened during the week of Valentine’s Day, we also decked ourselves out in red and pink clothing and ran some love-themed polls. Because let’s be honest, when love is involved, subtlety goes right out the window. Here are some of the findings from our polls:

- The audience’s favorite way to say “I love you” to the terminal was: `echo "I love you"`.

- When asked to play “Fling, Marry, Kill” for logs, metrics, and traces, the audience universally wanted to kill logs. Our panelists were split on this decision, however: three members agreed with the audience, while two opted to kill metrics instead.

- The audience’s favorite secret crush (i.e., a software skill they’d like to learn more about) was machine learning and AI. However, none of our panelists were tempted by those sweet LLMs.

Also, fair warning: at one point polyamory came up in reference to observability, along with the idea that we should go beyond o11y to po11y when building the future of observability.

Wherever you are on your OpenTelemetry or observability journey, there’s definitely something for you in this wide-ranging discussion. Here’s just a small sample of topics we covered:

- How getting started with OpenTelemetry can be a challenge, including some pros and cons to relying on auto-instrumentation as a first step.

- Why the community is so important when it comes to OpenTelemetry, and why you should share feedback with the SIGs and contribute back wherever possible.

- What our Observability Prince Charming looks like, including greater support for OTel-native instrumentation, easier ways to connect OTel components together, and diverse options at the SDK and API levels without compromising on interoperability.

- Where AI can provide the most value when it comes to observability.

- What some good approaches are for migrating legacy apps to OpenTelemetry.

Check out the full panel below, then scroll past the video for a few of the best quotes from our discussion and the full transcript. See you at the next one!