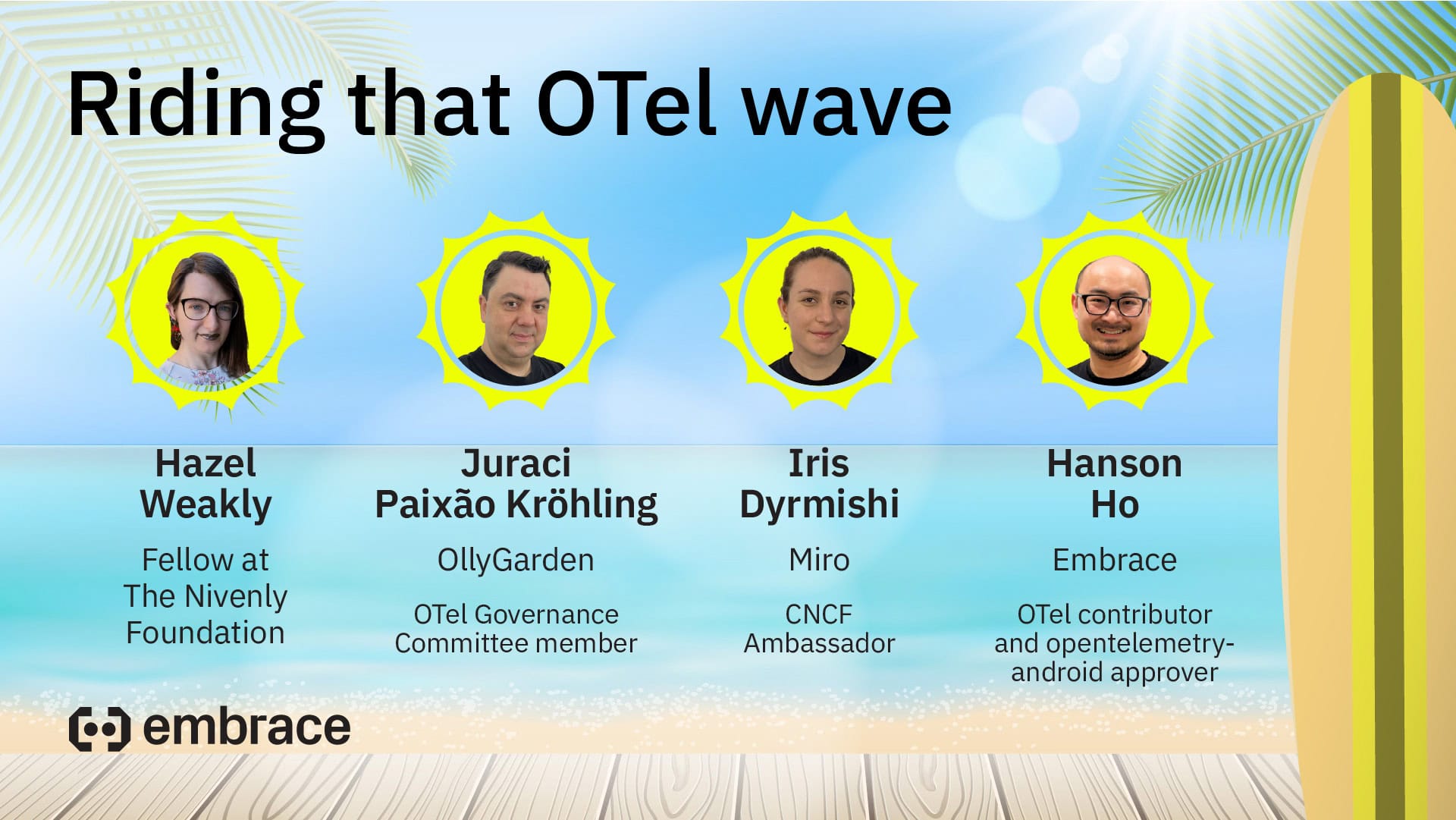

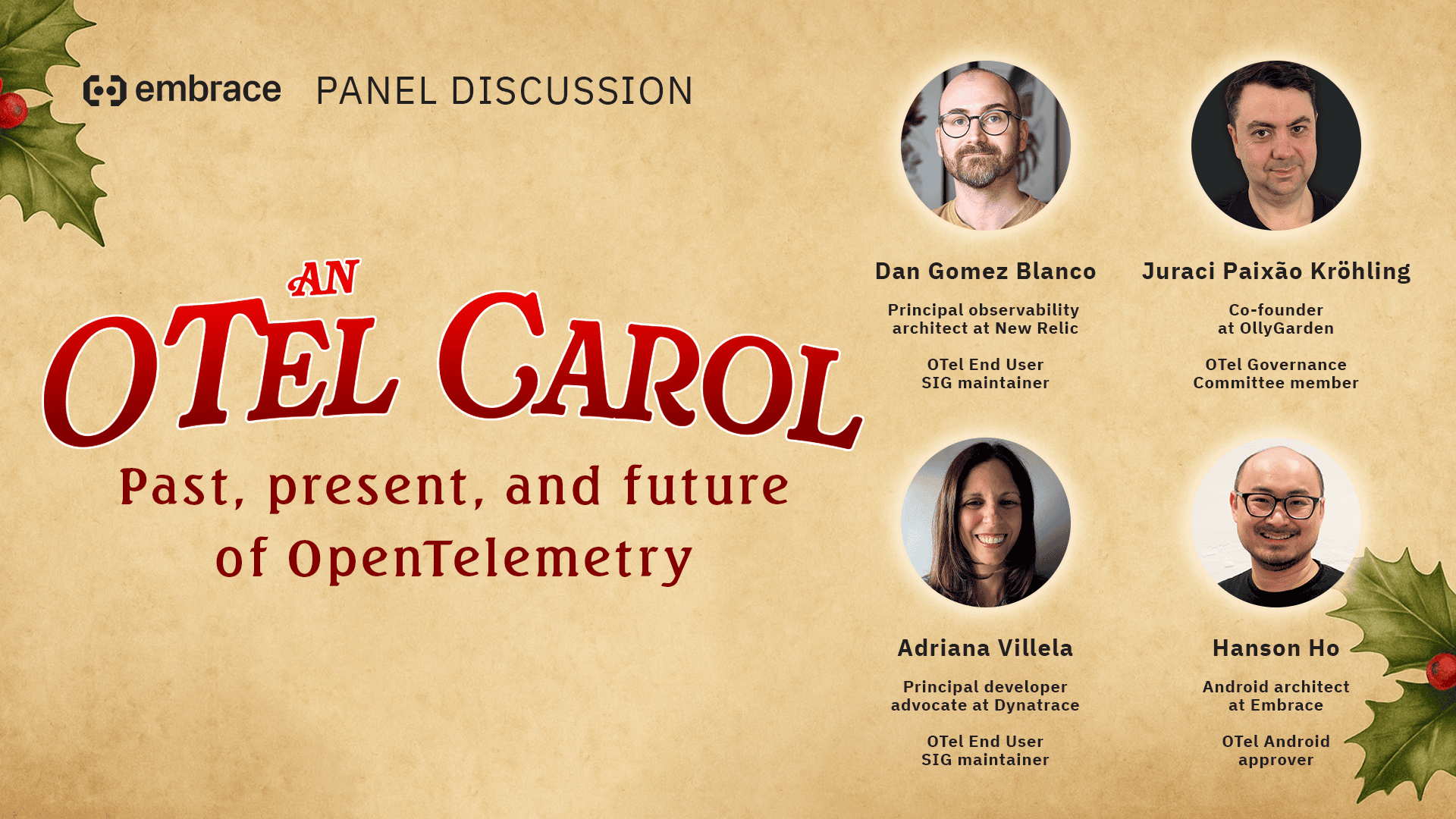

Recently, a fun group of OTel experts and enthusiasts gathered for two reasons: getting ready for summer and chatting about how we can improve observability and make it easier to understand what’s going on in our software systems. (You can watch the full video here.)

In other words, we wanted to help you learn how to hang ten on some sweet OTel and observability waves. I guess we could also call it “ob-surf-ability.”

I’ll wait here for you to recover from that facepalm.

Wherever you are on your OpenTelemetry or observability journey, there’s definitely something for you in this wide-ranging discussion. Here’s just a small sample of topics we covered:

- Strategies large engineering teams can use to get started with OpenTelemetry successfully

- Why observability engineers must highlight the value of using OpenTelemetry to developers and not just senior leadership

- Why you should strive for purposeful instrumentation in your telemetry (and yes, there are many reasons beyond reducing costs)

- Tools and architectural approaches that improve how you work with the OTel Collector

- Why collecting telemetry in the OTel data format is so hard for mobile and web apps

- If OTel for mobile were a sandwich, what would be the condiments, meat, and bread? (Yes, we really talked about this.)

If you’d like a sneak peek at some of the highlights, read on! We’ve got key panelist quotes, favorite answers to questions, mic-drop moments, OTel resources, and more. If you’d prefer to check out the video instead, you can watch the full panel discussion here.

Hope you enjoy it, and we’ll see you at the next one!