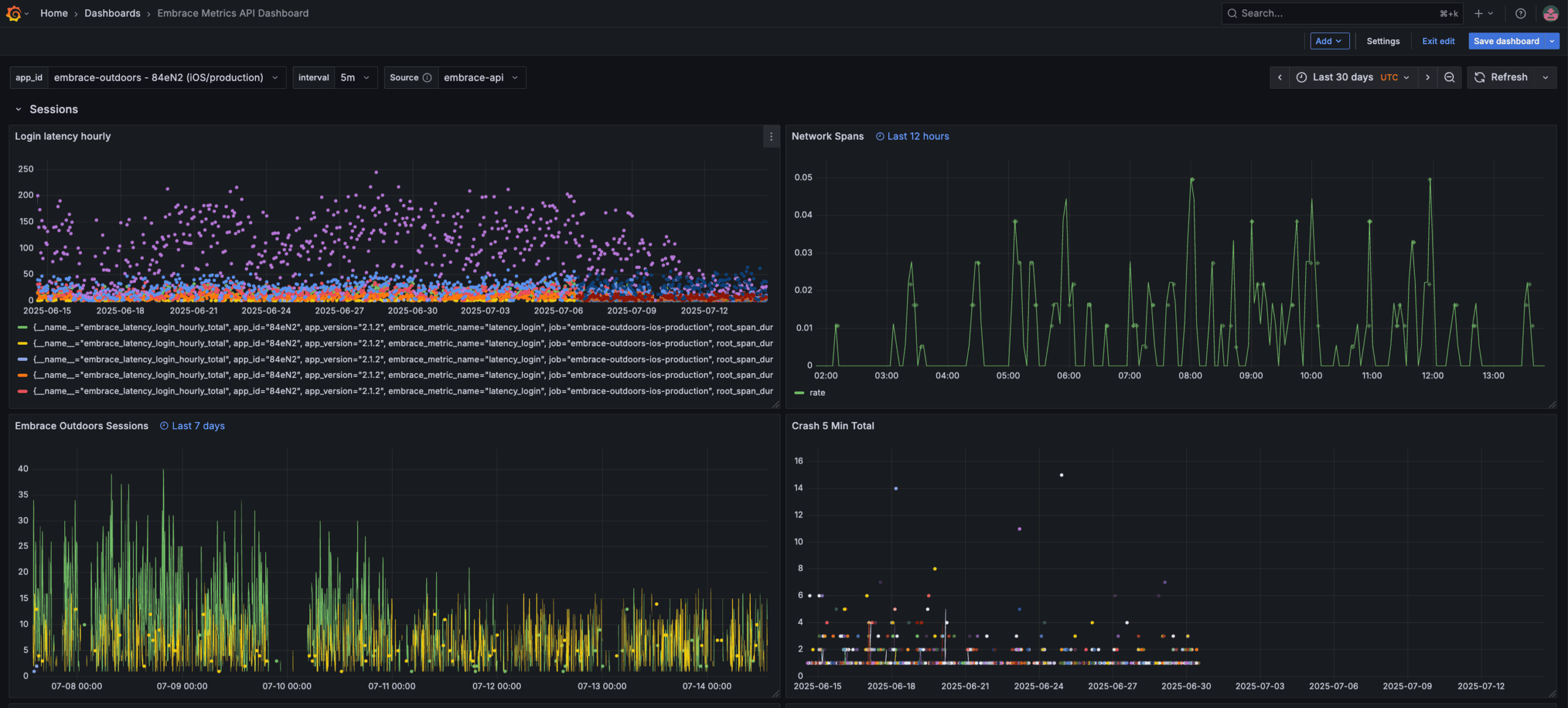

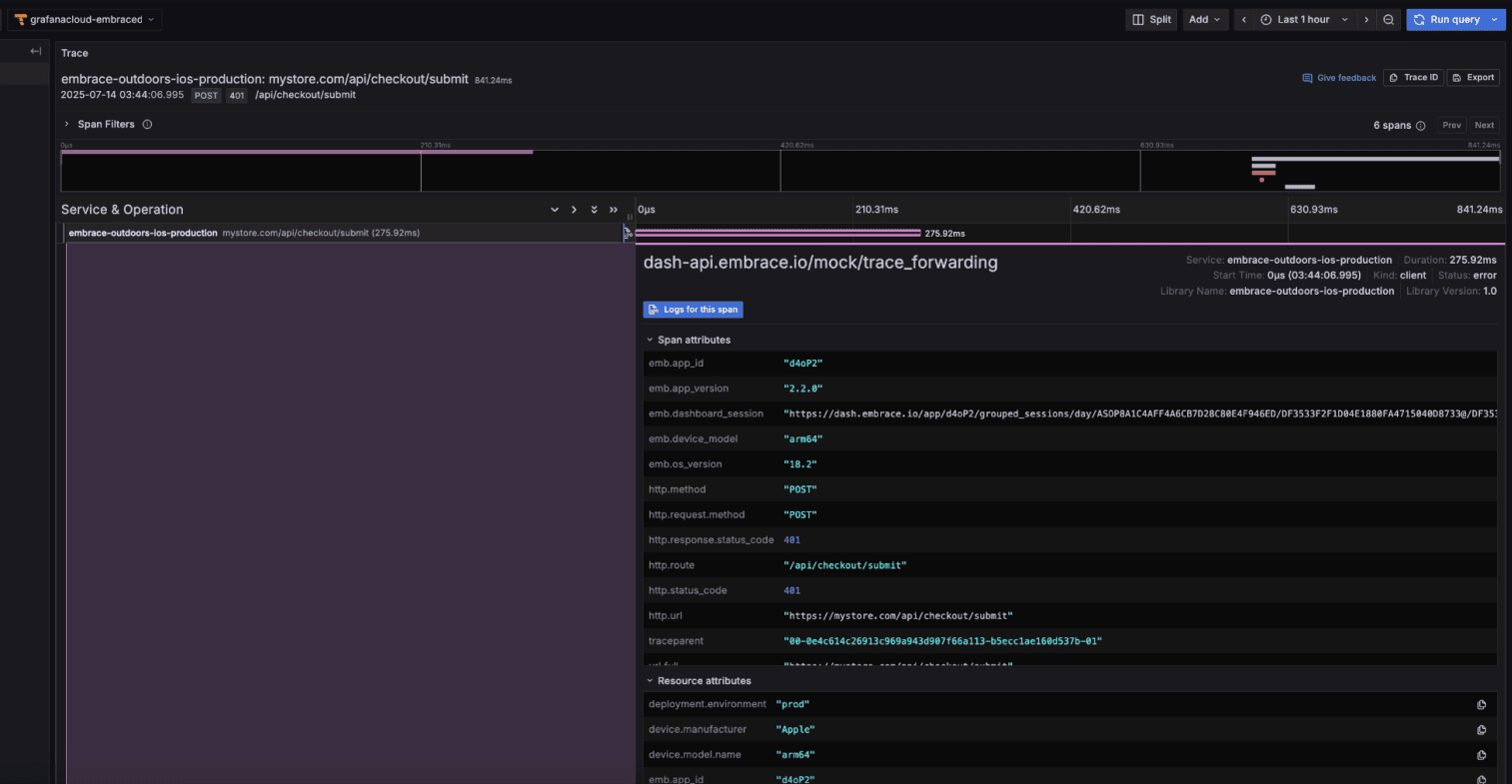

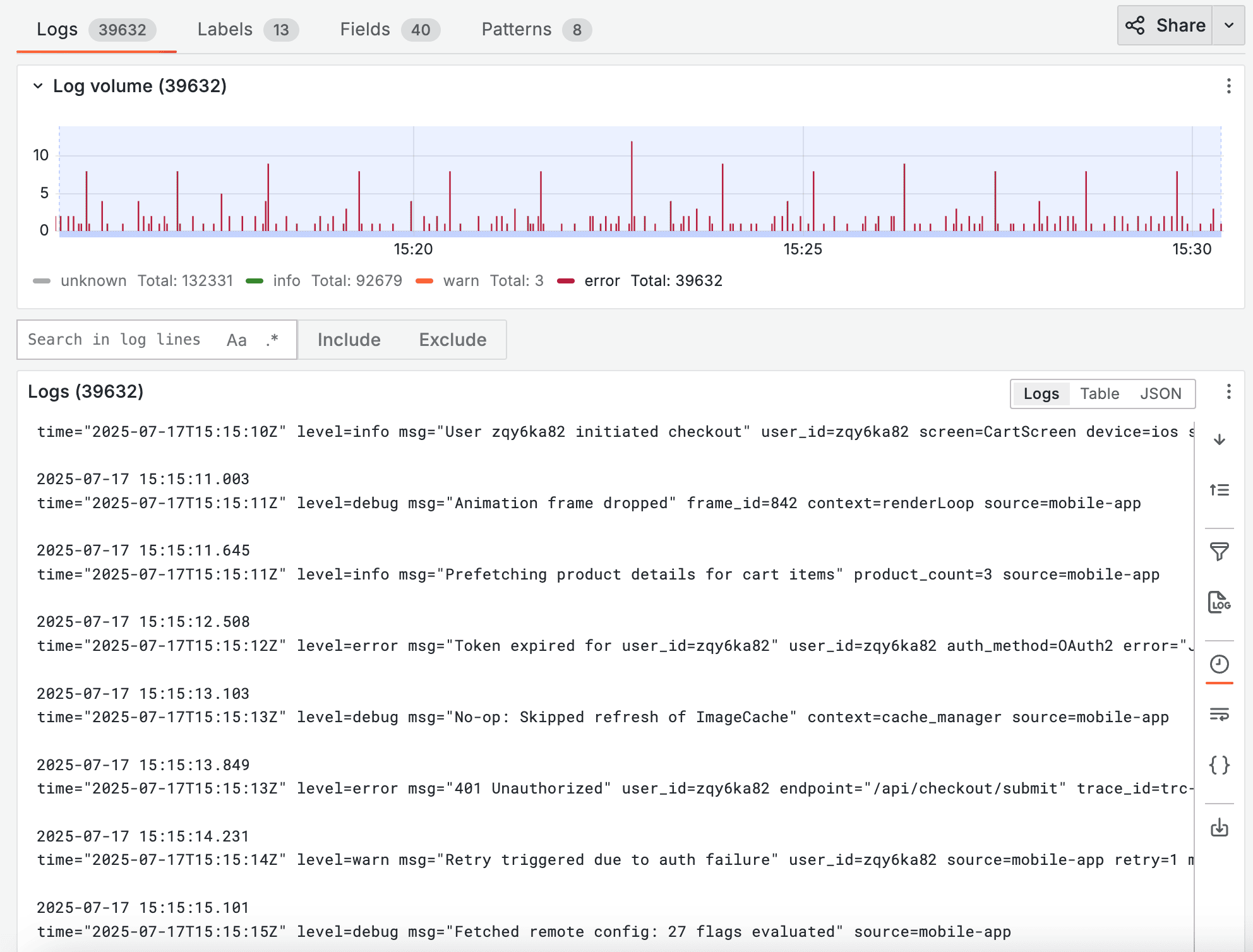

Mobile applications present unique observability challenges that general-purpose platforms weren’t designed to handle. Tools like Grafana offer powerful capabilities for backend systems, but they are less effective at debugging mobile-specific issues. This post walks through a real-world troubleshooting scenario to illustrate the fundamental differences between traditional observability approaches and user-centric solutions.